A team from Moscow State University (MSU) and Huawei's research center has successfully fooled ArcFace, a widely used face identification (Face ID) system. The method was as simple as it was ingenious: a color sticker, printed and affixed to a hat.

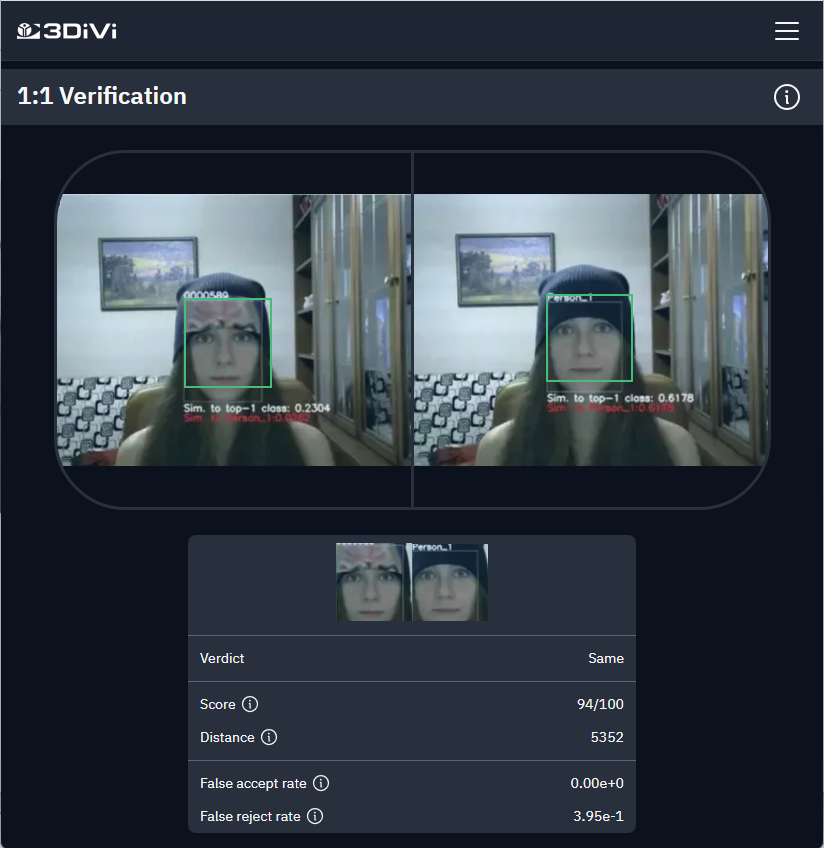

Under normal circumstances, the system recognizes faces reliably and correctly identifies the subject as Person_1. Once the wearer adds the specially designed color sticker, however, the system can no longer make that identification.

The adversarial sticker is generated as an image measuring 400×900 pixels. Its pattern resembles raised eyebrows, which is consistent with previous research suggesting that eyebrows are among the most important features when humans recognize faces.

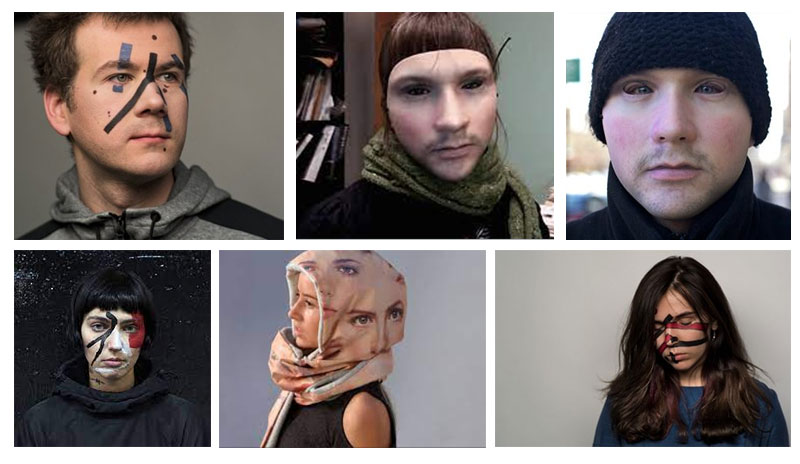
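To make the idea of a "specially designed" sticker more concrete, here is a minimal toy sketch in Python. It is not the researchers' actual pipeline, which this article does not describe. It assumes a made-up linear "embedding" in place of a real face recognition network, a 16-pixel "face", and cosine similarity as the matching score. All names (`embed`, `optimize_sticker`, `patch_idx`) are hypothetical. Only the pixels covered by the sticker are changed, and they are pushed step by step in the direction that lowers the similarity to the enrolled face.

```python
import math
import random


def embed(W, x):
    """Toy linear 'face embedding': each output is a weighted sum of pixels."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(p * q for p, q in zip(a, b))
    norm_a = math.sqrt(sum(p * p for p in a))
    norm_b = math.sqrt(sum(q * q for q in b))
    return dot / (norm_a * norm_b)


def optimize_sticker(W, image, template, patch_idx, steps=300, lr=0.1):
    """Gradient descent on the sticker pixels only, lowering similarity to template.

    Returns the lowest-similarity image seen during the search.
    """
    x = list(image)
    best_x, best_sim = list(x), cosine(embed(W, x), template)
    norm_t = math.sqrt(sum(t * t for t in template))
    for _ in range(steps):
        e = embed(W, x)
        norm_e = math.sqrt(sum(v * v for v in e))
        sim = cosine(e, template)
        # Gradient of the cosine similarity with respect to the embedding.
        grad_e = [t / (norm_e * norm_t) - sim * v / (norm_e ** 2)
                  for t, v in zip(template, e)]
        for j in patch_idx:
            # Chain rule through the linear embedding gives d(sim)/d(x_j).
            grad_xj = sum(W[i][j] * grad_e[i] for i in range(len(W)))
            # Step downhill, then clamp to a printable pixel range [0, 1].
            x[j] = min(1.0, max(0.0, x[j] - lr * grad_xj))
        new_sim = cosine(embed(W, x), template)
        if new_sim < best_sim:
            best_x, best_sim = list(x), new_sim
    return best_x


# Hypothetical toy setup: random weights stand in for a trained network.
rng = random.Random(0)
n_pixels, n_features = 16, 8
W = [[rng.gauss(0, 1) for _ in range(n_pixels)] for _ in range(n_features)]
face = [rng.random() for _ in range(n_pixels)]
template = embed(W, face)  # enrolled embedding of the clean face

patch_idx = [0, 1, 2, 3]   # pixels covered by the sticker
plain = list(face)
for j in patch_idx:
    plain[j] = 0.5         # plain gray sticker as the starting point

adversarial = optimize_sticker(W, plain, template, patch_idx)
print("plain sticker similarity:    ", cosine(embed(W, plain), template))
print("optimized sticker similarity:", cosine(embed(W, adversarial), template))
```

In this toy setting the optimized sticker should score lower against the enrolled face than the plain gray one, even though the rest of the image is untouched. A real attack works against a deep network and a physical, printed sticker, so it is considerably harder than this sketch suggests.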

The result brings to mind a similar recent study from the University of Leuven (KU Leuven) in Belgium. In that case, the open-source object detection system YOLO was deceived by an "adversarial patch" in the form of a piece of colored cardboard.

It is tempting to conclude that anyone wishing to deceive such a system no longer needs elaborate makeup or silicone masks. Not all systems are fooled so easily, however.

For instance, the algorithms developed by 3DiVi are not so easily compromised. This highlights the importance of using tried and tested solutions in the face recognition field.

These incidents underscore the ongoing challenges in the field of face recognition and the need for continuous research and development. As adversarial attacks become more sophisticated, so too must the systems designed to counter them. The race is on to develop face recognition systems that can withstand these novel forms of attack, ensuring the security and reliability of this crucial technology.