Automated facial recognition began in the 1960s with the work of Woody Bledsoe, Helen Chan Wolf, and Charles Bisson. Their system was innovative but still depended on manual input: an operator had to mark facial features such as the eyes, ears, nose, and mouth on each photograph by hand. The system then calculated distances and ratios from these features to a common reference point and compared them against reference data.

In the 1970s, Goldstein, Harmon, and Lesk took a further step toward automating the process. They used 21 specific subjective markers, including hair color and lip thickness, to facilitate recognition.

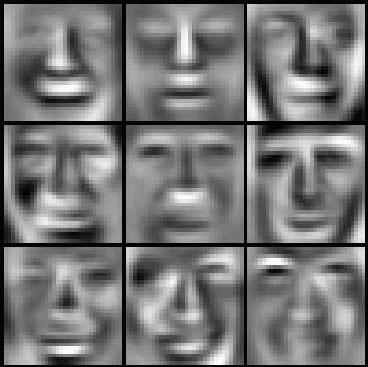

The Eigenface approach, a groundbreaking method for facial recognition, grew out of work published by Sirovich and Kirby in 1987. Matthew Turk and Alex Pentland adopted it in 1991 for face classification. The method represents facial images with a compact set of characteristic pictures, or eigenpictures, from which a face can be approximately reconstructed.

Eigenfaces are a set of eigenvectors used to address the computer vision problem of human face recognition. The concept, initially developed by Sirovich and Kirby in 1987, was later used by Matthew Turk and Alex Pentland for face classification. The eigenfaces are the eigenvectors of the covariance matrix of the training images and form a basis set for those images. Keeping only the leading eigenvectors reduces the dimensionality, so a smaller set of basis images can represent the original training images.

A brief historical overview: The Eigenface approach originated from the quest for a low-dimensional representation of face images. Sirovich and Kirby demonstrated that principal component analysis could be applied to a collection of face images to form a set of basis features. These basis images, known as eigenpictures, could be linearly combined to reconstruct images in the original training set.
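The linear combination described above can be written compactly. The notation here is an assumption introduced for illustration, not taken from the original papers: $x$ is a face image flattened into a vector, $\bar{x}$ is the mean of the training images, $u_1, \dots, u_K$ are the $K$ retained orthonormal eigenpictures, and $w_k$ are the weights.

$$
w_k = u_k^{\top}\,(x - \bar{x}), \qquad \hat{x} = \bar{x} + \sum_{k=1}^{K} w_k\, u_k
$$

The reconstruction $\hat{x}$ is an approximation of $x$ that generally improves as $K$ grows, and the weight vector $(w_1, \dots, w_K)$ serves as the low-dimensional representation of the face.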

The practical implementation of Eigenfaces involves several steps: preparing a training set of face images, subtracting the mean, calculating the eigenvectors and eigenvalues of the covariance matrix, and selecting the principal components.
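The steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function name `compute_eigenfaces`, the row-per-image layout, and the choice to normalize the covariance by the number of images are all assumptions. It also diagonalizes the small image-by-image matrix instead of the full pixel-by-pixel covariance matrix, a common shortcut that yields the same leading eigenvectors when there are far fewer images than pixels.

```python
import numpy as np

def compute_eigenfaces(images, num_components):
    """Hypothetical helper: images is (n_images, n_pixels), one flattened face per row.

    num_components should not exceed n_images - 1, because mean subtraction
    removes one degree of freedom and leaves a zero eigenvalue.
    """
    X = np.asarray(images, dtype=float)
    n_images = X.shape[0]

    # Step 1-2: take the training set and subtract the mean face.
    mean_face = X.mean(axis=0)
    A = X - mean_face

    # Step 3: eigen-decompose the small (n_images x n_images) matrix A A^T / n.
    # If v is an eigenvector of A A^T / n, then A^T v is an eigenvector of the
    # pixel-space covariance A^T A / n with the same eigenvalue.
    eigvals, eigvecs = np.linalg.eigh(A @ A.T / n_images)

    # Step 4: keep the components with the largest eigenvalues.
    order = np.argsort(eigvals)[::-1][:num_components]
    eigvals = eigvals[order]

    # Map back to pixel space and scale each eigenface to unit length.
    eigenfaces = (A.T @ eigvecs[:, order]).T
    eigenfaces /= np.linalg.norm(eigenfaces, axis=1, keepdims=True)
    return mean_face, eigenfaces, eigvals
```

Each row of the returned `eigenfaces` array can be reshaped to the original image dimensions and viewed as a picture, which is where the name comes from.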

The technique employed in creating Eigenfaces and using them for recognition has found applications beyond face recognition. It is used in handwriting recognition, lip reading, voice recognition, sign language and hand gesture interpretation, and medical imaging analysis.

Eigenfaces offer a straightforward and cost-effective solution for face recognition. The training process is fully automated and easy to code. Once the eigenfaces of a database are calculated, face recognition can be achieved in real time.
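To illustrate why recognition is cheap once the eigenfaces are known, the sketch below projects a new face onto the eigenfaces and picks the nearest training face in that low-dimensional space. The function names, the nearest-neighbor rule with Euclidean distance, and the use of an SVD (whose right singular vectors coincide with the covariance eigenvectors of the centered data) are assumptions chosen for brevity, not the method of any particular system.

```python
import numpy as np

def fit_eigenfaces(train_faces, num_components):
    """Hypothetical helper: train_faces is (n_images, n_pixels), one face per row."""
    X = np.asarray(train_faces, dtype=float)
    mean_face = X.mean(axis=0)
    centered = X - mean_face
    # Rows of vt are orthonormal directions sorted by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    eigenfaces = vt[:num_components]
    # Precompute the weight vector of every training face once, offline.
    train_weights = centered @ eigenfaces.T
    return mean_face, eigenfaces, train_weights

def recognize(face, mean_face, eigenfaces, train_weights, labels):
    """Return the label of the closest training face and its distance."""
    # Projection is a single matrix-vector product, which keeps lookup fast.
    weights = (np.asarray(face, dtype=float) - mean_face) @ eigenfaces.T
    distances = np.linalg.norm(train_weights - weights, axis=1)
    best = int(np.argmin(distances))
    return labels[best], float(distances[best])
```

In practice a distance threshold is often added so that a face far from every training example is reported as unknown; the threshold value would have to be tuned for the dataset.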

However, it's crucial to acknowledge that the Eigenface method has its limitations. It is highly sensitive to lighting, scale, and translation, and requires a strictly controlled environment. It also struggles to accurately capture changes in expressions.