Face recognition is becoming a cornerstone of urban safety, but deploying these systems is far from straightforward. While many believe high-resolution cameras are the solution, the reality is more complex: hidden factors often undermine identification accuracy and speed.

I'm Mikhail, Product Manager for Video Analytics at 3DiVi. To identify the factors affecting facial recognition accuracy, we ran multi-season tests in three cities, covering real-life scenarios such as public safety monitoring and access control.

The tests, carried out from November 2023 to July 2024, spanned four seasons and three cities. We analyzed various camera setups on city streets under challenging conditions such as low light, adverse weather, and vibration. Key findings covered the impact of camera resolution, angles, mounting locations, and system architecture (centralized vs. edge processing). In total, we made 1,056 identification attempts against a database of 528,000 faces.

Based on these findings, I’d like to share 14 key factors that influence face recognition system performance and how to address them through the right equipment, setup, and camera placement.

These factors fall into two key categories:

1️⃣ Internal – system-level influences

2️⃣ External – environmental and operational challenges

Internal Factors

1. Network Bandwidth

Impact on successful identification: Low (4 losses out of 1,056 identification attempts)

Existing city infrastructure often cannot carry the large volumes of video that numerous cameras send to the data center. This can lead to dropped frames or short-term video freezes, and in some cases entire sequences of people passing by were lost.

2. Hardware Stability

Impact on successful identification: Significant (11 losses out of 1,056 identification attempts)

When a video stream travels from the camera to the video analytics servers, it passes through several devices: the camera itself, a PoE switch, network switches on the way to the data center, a video recording server, a video analytics server, and a server that stores facial vectors and identification results. Any of this hardware can fail at the precise moment the target individual appears in the camera's field of view and looks toward it.
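
Because the stream must pass through every device in this chain, the chance that the whole path is up at a given moment is roughly the product of the individual device availabilities, assuming failures are independent. A minimal sketch of that arithmetic follows; the device names mirror the list above, but the availability figures are hypothetical placeholders, not values measured in our tests.

```python
from math import prod

# Hypothetical availabilities (fraction of time each device is up).
# Illustrative assumptions only, not figures measured in the tests.
CHAIN = {
    "camera": 0.999,
    "poe_switch": 0.999,
    "uplink_switches": 0.998,
    "recording_server": 0.999,
    "analytics_server": 0.998,
    "vector_storage_server": 0.999,
}


def chain_availability(availabilities):
    """Availability of devices in series: all of them must be up at once.

    Assumes device failures are independent of one another.
    """
    return prod(availabilities)


if __name__ == "__main__":
    a = chain_availability(CHAIN.values())
    print(f"End-to-end availability: {a:.4f}")
    print(f"Expected downtime: {(1 - a) * 24 * 60:.1f} min/day")
```

Even when every device individually is up 99.8 to 99.9% of the time, the chain as a whole is down more often than any single link, and each extra hop adds another factor to the product.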

3. Camera Resolution

Impact on successful identification: Significant (22 losses out of 1,056 identification attempts)

High-resolution cameras tempt users to cover larger areas. This reduces the relative size of faces in the frame, introduces distortion near the frame edges, and lowers overall image quality. Higher resolution also raises the cost of the camera itself, of the infrastructure needed to transmit the stream to the data center, and of storage.
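
A quick way to see why wider coverage hurts is to estimate how many pixels land across a face. If an image W pixels wide spans a scene S meters wide at the target distance, a face of width w gets about W × w / S pixels. The sketch below assumes a 0.15 m face width and an ideal, distortion-free lens; both are simplifying assumptions.

```python
FACE_WIDTH_M = 0.15  # assumed typical face width, a rough approximation


def pixels_across_face(image_width_px: int,
                       scene_width_m: float,
                       face_width_m: float = FACE_WIDTH_M) -> float:
    """Approximate horizontal pixels covering a face at the target plane.

    Assumes a distortion-free lens and a face roughly facing the camera.
    """
    return image_width_px * face_width_m / scene_width_m


if __name__ == "__main__":
    print(pixels_across_face(1920, 6.0))   # 1080p camera covering 6 m
    print(pixels_across_face(3840, 20.0))  # 4K camera stretched over 20 m
```

Under these assumptions, a 1920-pixel camera covering a 6 m wide scene puts about 48 pixels across a face, while a 3840-pixel camera stretched over 20 m yields only about 29, so the higher-resolution camera ends up with smaller faces.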

4. Camera Sensor Quality

Impact on successful identification: High (27 losses out of 1,056 identification attempts)

As a person moves within the camera's field of view, the system tracks their face and selects the best image based on criteria such as angle, rotation, blur, interocular distance, and lighting. Between 15 and 30 frames per second are analyzed over several seconds. Budget cameras often produce noisy, low-quality images that quality assessment algorithms may discard, and better frames might not be available.
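
A minimal sketch of best-frame selection along a track: each candidate frame gets a scalar quality score, frames below a floor are discarded, and the best remaining frame, if any, is kept. The `FaceCandidate` fields, the scoring formula, the 60-pixel size reference, and the 0.3 floor are all hypothetical; this is not how Omni Platform scores frames, only an illustration of why a noisy camera can leave a track with no usable frame at all.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class FaceCandidate:
    """One detection of the tracked face (hypothetical fields)."""
    frame_idx: int
    yaw_deg: float         # left/right head rotation
    pitch_deg: float       # up/down head tilt
    sharpness: float       # 0..1, lower means more blur or noise
    interocular_px: float  # distance between eye centers, in pixels


def score(c: FaceCandidate) -> float:
    """Hypothetical 0..1 quality score combining pose, sharpness and face size."""
    pose = max(0.0, 1.0 - (abs(c.yaw_deg) + abs(c.pitch_deg)) / 90.0)
    size = min(1.0, c.interocular_px / 60.0)  # assume 60 px is "large enough"
    return pose * c.sharpness * size


def best_frame(track: Sequence[FaceCandidate],
               floor: float = 0.3) -> Optional[FaceCandidate]:
    """Best candidate above the quality floor, or None if every frame is rejected."""
    usable = [c for c in track if score(c) >= floor]
    return max(usable, key=score, default=None)
```

If sensor noise pushes every frame of a track below the floor, `best_frame` returns `None` and that pass-by is lost, which is the failure mode described above.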

Cheaper camera sensors may also fail sooner by "burning out" in the sun, which introduces additional noise and blur and can render the camera useless for face recognition.

5. Video Analytics Server Performance

Impact on successful identification: High

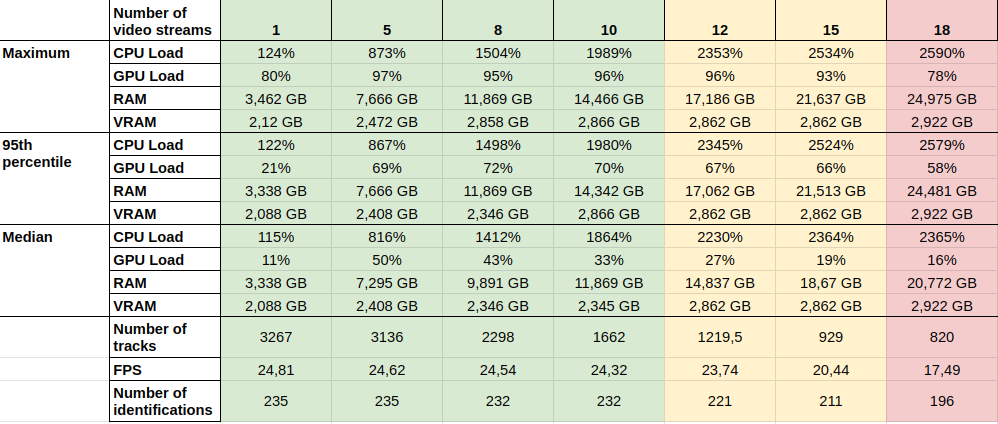

The higher the resolution, number of people, and video streams, the greater the load on computing resources. For instance, our video analytics system Omni Platform is designed to prevent crashes during peak loads. Instead of shutting down completely, the system begins skipping some frames submitted for analysis.

While this keeps the system running, it can cause the frames with optimal face angles to be excluded from analysis. As shown in the table above, increasing the number of video streams per server eventually reduces the processing rate from 25 to 17 FPS (frames per second), and the number of identifications drops from 235 to 196.
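
When the server falls behind, some frames have to be skipped. The source does not say how Omni Platform chooses which ones, so the following is only an illustrative sketch of one simple policy: an integer accumulator that admits frames evenly at the rate the server can sustain (for example, 17 out of every 25) instead of dropping them in bursts. The function name and the integer-FPS inputs are assumptions.

```python
def frames_to_analyze(n_frames: int, input_fps: int, capacity_fps: int) -> list[int]:
    """Indices of frames to keep so the analyzed rate is at most capacity_fps.

    Frames are dropped evenly rather than in bursts, which keeps the longest
    gap (during which a good face angle could be missed) as short as possible.
    Integer arithmetic avoids floating-point drift in the accumulator.
    """
    if capacity_fps >= input_fps:
        return list(range(n_frames))
    keep = []
    budget = 0
    for i in range(n_frames):
        budget += capacity_fps
        if budget >= input_fps:
            keep.append(i)
            budget -= input_fps
    return keep


if __name__ == "__main__":
    kept = frames_to_analyze(25, input_fps=25, capacity_fps=17)
    print(len(kept), kept)
```

Spreading the drops evenly means no more than one frame in a row is skipped at 17 of 25, whereas dropping a contiguous block of 8 frames could skip a person's only frontal glance.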

6. Quality of Reference Photos

Impact on successful identification: High (31 false matches out of 1,056 identification attempts)

Low-quality reference photos in the database can lead to false positives.

If the reference photos in the database, which are used to build vectors for subsequent facial recognition, are of low quality, the system will produce more false matches.

To address this, the 3DiVi team developed a proprietary service that estimates whether an image is of sufficient quality for identification tasks.

The assessment is based on 19 parameters defined by ISO/IEC 29794-5 and the ICAO Photo Guidelines. In our experience, the system performs reliably and produces accurate identifications when the database contains faces with a quality score of 80 or higher (NIST Visa/Border) and the faces captured from video score 40 or higher (NIST Mugshot/Wild).
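
The two thresholds can be applied as a simple gate before enrollment and search. A minimal sketch, assuming the quality service returns a score on a 0 to 100 scale; the function and constant names are hypothetical and not part of any 3DiVi API.

```python
# Thresholds from the text; the 0..100 score scale is an assumption.
REFERENCE_MIN_QUALITY = 80  # database photos (NIST Visa/Border level)
PROBE_MIN_QUALITY = 40      # faces captured from video (NIST Mugshot/Wild level)


def can_enroll(reference_quality: float) -> bool:
    """Whether a reference photo is good enough to build a database vector."""
    return reference_quality >= REFERENCE_MIN_QUALITY


def can_search(probe_quality: float) -> bool:
    """Whether a face captured from video is good enough to search with."""
    return probe_quality >= PROBE_MIN_QUALITY


def triage_references(scores: dict[str, float]) -> tuple[list[str], list[str]]:
    """Split reference photos (id -> quality) into accepted and 'retake' lists."""
    accepted = sorted(k for k, q in scores.items() if can_enroll(q))
    retake = sorted(k for k, q in scores.items() if not can_enroll(q))
    return accepted, retake
```

Flagging low-scoring reference photos for a retake before they enter the database attacks the false-match problem at its source instead of compensating for it later with a stricter threshold.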

Best Practices for Managing Internal Factors

✅️ High camera resolution is less crucial than it seems. It is better to use a specialized long-focus camera with a lower output resolution that keeps faces large in the frame. This reduces network bandwidth requirements, disk space for video storage, and the server capacity needed for video analytics.

✅️ Video should be processed at the edge (directly at the intersections where cameras are installed) using specialized edge devices instead of transmitting a "rich stream" to the data center. This reduces the risk of identification losses due to transmission failures, decreases costs for building and maintaining communication lines and switching equipment, and reduces the need for video storage in the data center.

In 2024, we launched our own production of dust- and water-resistant 3DV-EdgeAI-32 computing units. During testing, we also used an insulated enclosure with an Nvidia computing unit.

✅️ The quality of the source photos in the database should be above 80 (you can check this with our online quality assessment tool). Otherwise, the likelihood of false identifications or misses increases.

✅️ Special services do not have the resources to follow up on false identifications, so the principle "if a person isn't recognized by this camera, they will be recognized by another" helps reduce false positives and keeps the system from being discredited.

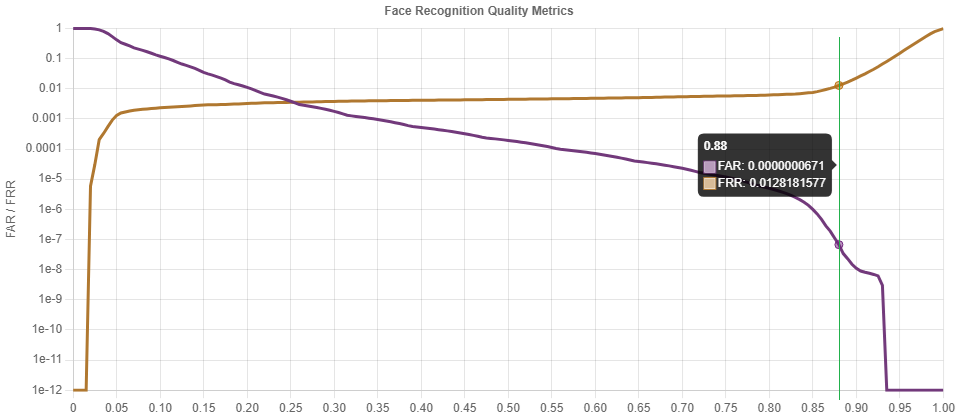

The recommended confidence threshold for identification is set to reduce false positives in databases with over 500,000 faces. It is essential to assess how many people will appear in front of the camera daily and determine how many false positives users are willing to handle.

Using the FAR/FRR (False Acceptance Rate / False Rejection Rate) graph, the optimal threshold can be chosen. For example, in our system, this threshold is set at 87.6%.
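
This reasoning can be turned into arithmetic. If FAR is the chance that a single one-to-one comparison falsely matches, and comparisons against a database of N faces are treated as independent (a simplification), then the chance that one passer-by triggers a false match is 1 - (1 - FAR)^N, and the expected daily false positives are that value times the number of faces seen per day. The sketch below picks, from a list of (threshold, FAR, FRR) points read off a FAR/FRR curve, the point with the lowest FRR that stays within a daily false-positive budget. The curve values in the test are made up for illustration and are not the curve behind the 87.6% threshold.

```python
import math
from typing import Optional, Sequence, Tuple

CurvePoint = Tuple[float, float, float]  # (threshold, far, frr)


def false_alarm_per_probe(far: float, db_size: int) -> float:
    """Chance one probe falsely matches anyone in the database.

    Assumes independent comparisons: 1 - (1 - far) ** db_size, computed with
    log1p/expm1 to stay accurate for tiny FAR values.
    """
    return -math.expm1(db_size * math.log1p(-far))


def pick_threshold(curve: Sequence[CurvePoint],
                   db_size: int,
                   faces_per_day: int,
                   max_false_per_day: float) -> Optional[CurvePoint]:
    """Lowest-FRR curve point whose expected daily false positives fit the budget."""
    ok = [p for p in curve
          if faces_per_day * false_alarm_per_probe(p[1], db_size) <= max_false_per_day]
    return min(ok, key=lambda p: p[2], default=None)
```

The design choice mirrors the principle above: the budget for false positives is fixed first, and the system accepts a higher miss rate (FRR) on any single camera to stay within it.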

External Factors

7. Vibration (Wind, Vehicle Movement)

Impact on successful identification: Low (3 errors out of 1,056 identification attempts)

The strongest vibration and shaking were observed on tram supports as trams passed; however, they had minimal impact on overall performance. The system processes 25 frames per second, so the shaking does not cause significant image blur.

Our general recommendation is that image displacement should not exceed 1% of the frame size.
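
A sketch of that check, assuming the per-frame shift (dx, dy) in pixels has already been measured (for example, by tracking features on the static background) and interpreting "1% of the frame size" per axis: 1% of the width horizontally and 1% of the height vertically. Both the measurement method and that interpretation are assumptions.

```python
from typing import Sequence, Tuple


def displacement_ok(dx_px: float, dy_px: float,
                    width_px: int, height_px: int,
                    limit: float = 0.01) -> bool:
    """True if the frame-to-frame shift stays within `limit` of each frame dimension."""
    return abs(dx_px) <= limit * width_px and abs(dy_px) <= limit * height_px


def share_of_shaky_frames(shifts: Sequence[Tuple[float, float]],
                          width_px: int, height_px: int,
                          limit: float = 0.01) -> float:
    """Fraction of measured shifts that exceed the limit (0.0 for no data)."""
    if not shifts:
        return 0.0
    bad = sum(1 for dx, dy in shifts
              if not displacement_ok(dx, dy, width_px, height_px, limit))
    return bad / len(shifts)
```

For a 1920 × 1080 stream this allows roughly 19 pixels of horizontal and 11 pixels of vertical shift between frames; logging the share of frames over the limit during a tram pass is a quick way to validate a mounting point.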

8. Weather Conditions (Rain, Fog, Snow)

Impact on successful identification: Low (7 errors out of 1,056 identification attempts)

During daylight or with sufficient lighting (>200 lux), snow and rain cause image noise comparable to the camera sensor's natural noise.

The situation worsens in low-light conditions when precipitation and airborne particles amplify the impact of glare from backlighting.

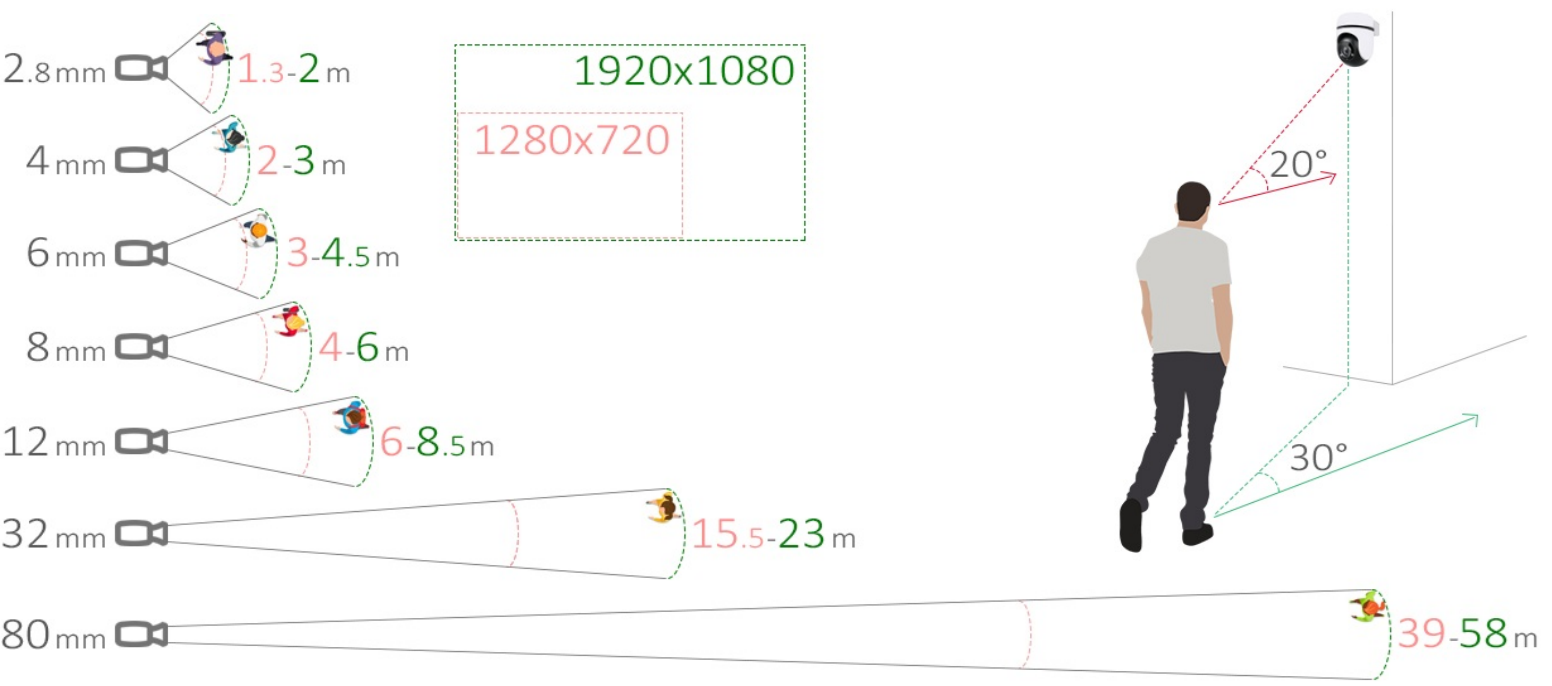

9. Distance to Target

Impact on successful identification: Significant (9 losses out of 1,056 attempts)

Long-focus cameras are necessary to ensure face identification at a distance. This may be required to reach an optimal identification zone (for example, where people are stationary and waiting for a green light) or to compensate for the camera's excessive tilt, especially when it needs to be raised above 2.5 meters.

As the distance to the identification point increases, the impact of the two previous factors (precipitation, airborne particles, shaking, and vibrations) begins to grow significantly.
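
To estimate how much focal length a distant identification zone demands, a pinhole-camera approximation is enough: the scene width covered at distance d equals sensor width × d / f. The sketch below solves for f given a target number of pixels across the face. The 5.6 mm sensor width, 0.15 m face width, and 60-pixel target are hypothetical values for illustration; real figures should come from the camera datasheet and the recognition vendor's requirements.

```python
FACE_WIDTH_M = 0.15  # assumed typical face width


def required_focal_length_mm(distance_m: float,
                             image_width_px: int,
                             sensor_width_mm: float,
                             target_px_across_face: float,
                             face_width_m: float = FACE_WIDTH_M) -> float:
    """Pinhole-model focal length that puts target_px_across_face pixels on a face.

    Ignores lens distortion and assumes the face is roughly perpendicular
    to the optical axis.
    """
    # Scene width at the target distance that still yields the desired pixel density.
    scene_width_m = image_width_px * face_width_m / target_px_across_face
    # Pinhole relation: scene_width / distance = sensor_width / focal_length.
    return sensor_width_mm * distance_m / scene_width_m


if __name__ == "__main__":
    for d in (5, 20):
        f = required_focal_length_mm(d, 1920, sensor_width_mm=5.6, target_px_across_face=60)
        print(f"{d} m -> {f:.1f} mm")
```

Under these assumptions, with a 1920-pixel-wide image, a zone 5 m away needs roughly a 6 mm lens, while one 20 m away needs roughly 23 mm, which is firmly long-focus territory.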

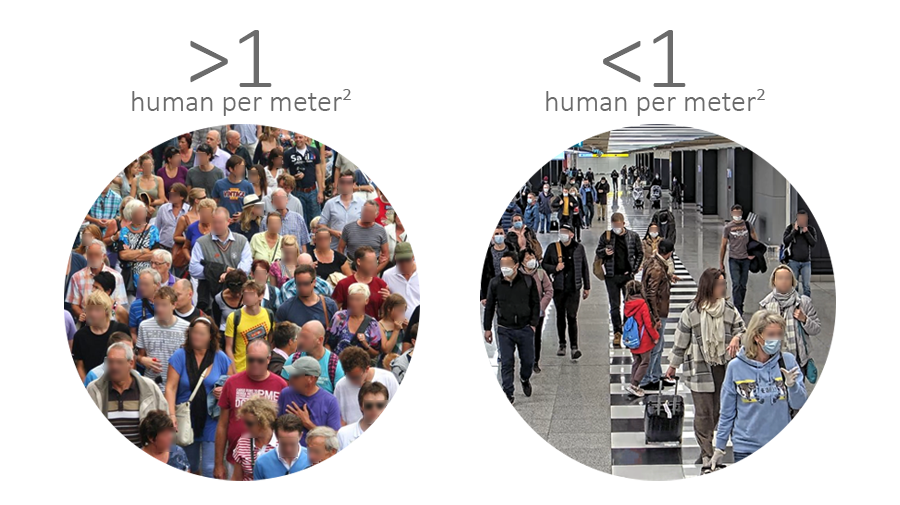

10. Crowd Density

Impact on successful identification: High (26 errors out of 1,056 identification attempts)

In dense crowds, there are more obstructions and occlusions, which increases the chance of missing favorable frames with a clear face image:

- The person is looking in the direction of the camera

- The face is in focus, and the image is not blurred due to active movement

- The face is free from excessive noise, artifacts, and interference, such as snowflakes, rain, hair, cigarette smoke, etc.

The optimal crowd density is no more than one person per square meter.
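
Checking a zone against this limit only needs a head count and the zone's ground area. A trivial sketch, assuming a person detector supplies the count and the waiting area has been measured on site; the function names are hypothetical.

```python
MAX_DENSITY_PER_M2 = 1.0  # recommended upper limit from the tests


def crowd_density(people_in_zone: int, zone_area_m2: float) -> float:
    """People per square meter in the identification zone."""
    if zone_area_m2 <= 0:
        raise ValueError("zone area must be positive")
    return people_in_zone / zone_area_m2


def zone_too_crowded(people_in_zone: int, zone_area_m2: float) -> bool:
    """True when density exceeds the recommended limit."""
    return crowd_density(people_in_zone, zone_area_m2) > MAX_DENSITY_PER_M2
```

For a hypothetical 4 m × 3 m waiting area, 12 people is still within the limit, while 18 people (1.5 per m²) exceeds it; logging such periods helps explain missed identifications at rush hour.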

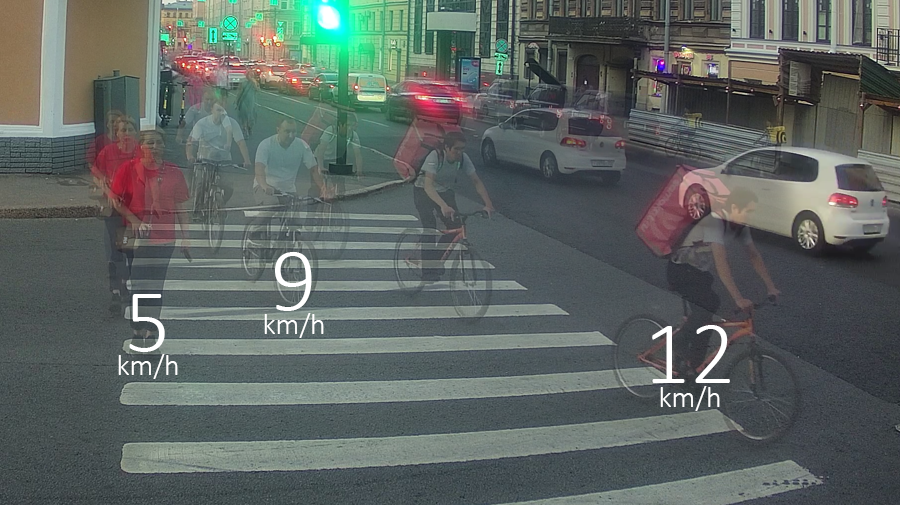

11. Speed of Movement

Impact on successful identification: High (28 errors out of 1,056 identification attempts)

The movement speed of people should be ≤ 5 km/h. This means that individuals running, riding a scooter/bicycle, etc., may be missed. If the face shifts more than its own size between two consecutive frames, this can cause excessive motion blur and a loss of tracking.
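
The face-size rule can be checked with simple arithmetic: at speed v and frame rate fps, a person moves v / fps between consecutive frames. The sketch below compares that shift with an assumed 0.15 m face width. It also assumes motion across the camera's view; people walking toward the camera shift less in the image.

```python
FACE_WIDTH_M = 0.15  # assumed typical face width


def shift_per_frame_m(speed_kmh: float, fps: float) -> float:
    """Distance moved between two consecutive frames, in meters."""
    return speed_kmh / 3.6 / fps


def exceeds_face_size(speed_kmh: float, fps: float,
                      face_width_m: float = FACE_WIDTH_M) -> bool:
    """True if the per-frame shift is larger than the face itself."""
    return shift_per_frame_m(speed_kmh, fps) > face_width_m
```

At 5 km/h and 25 fps, a face moves a little over a third of its own width between frames; a cyclist at 20 km/h moves about 22 cm, more than a whole face width, even before any frames are skipped under server load.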

12. Backlighting (Sunlight, Reflections)

Impact on successful identification: Critical (37 errors out of 1,056 identification attempts)

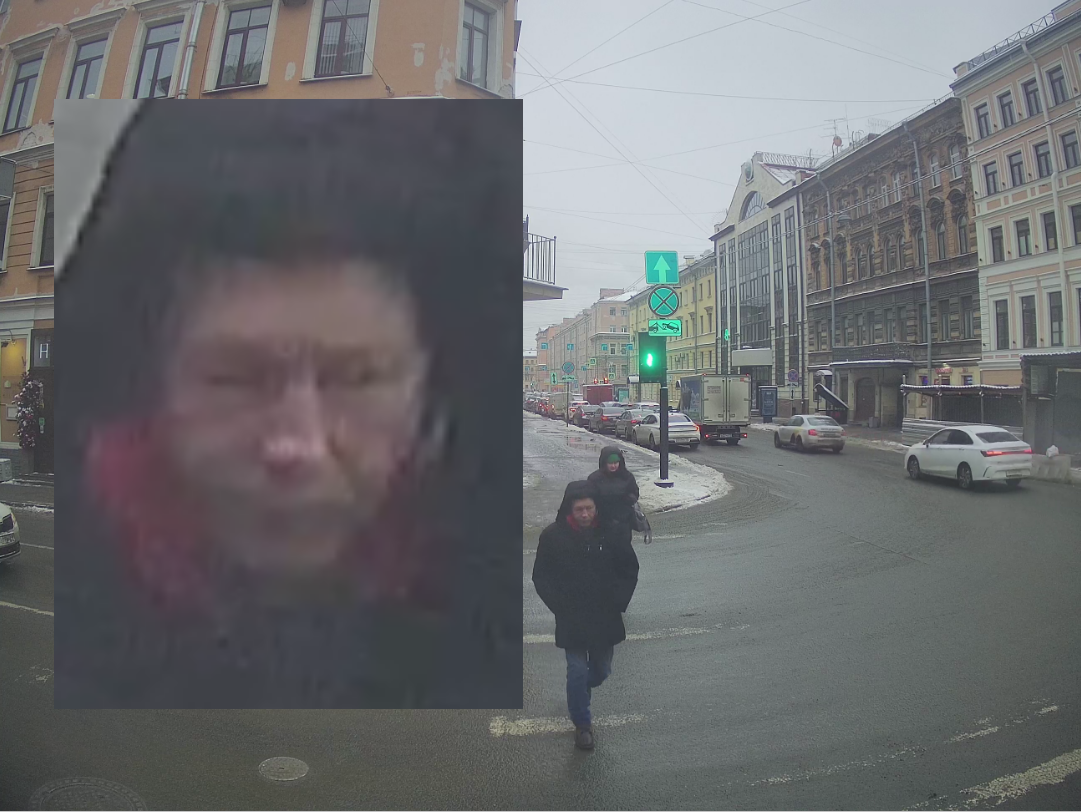

Backlighting during morning hours, reflections on wet asphalt, or glare from ice may not last long and might seem like a minor issue relative to the overall camera operation throughout the day or year. However, we lost a significant number of identifications during testing due to this factor.

This is the image from the camera facing southeast at 11:00 AM.

And this is the image from the same camera 30 minutes later.

Similarly, fog at night can worsen the impact of glare from traffic lights and passing vehicles, rendering facial images unsuitable for identification.

13. Camera Angle and Position

Impact on successful identification: Critical

People tend to look down at their feet or at their phones. When the camera is placed too high, the chances of capturing a frontal facial image, which is ideal for identification, decrease significantly. If the camera is positioned too far from the main flow of movement, the risk of blurriness increases.
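
The effect of mounting height can be quantified with basic trigonometry: the camera's downward tilt toward a face is atan((camera height - face height) / horizontal distance). The sketch below assumes an average face height of 1.6 m; the 15° limit in the usage note is a hypothetical example, not a figure from our tests.

```python
import math

FACE_HEIGHT_M = 1.6  # assumed average face height above the ground


def tilt_deg(camera_height_m: float, distance_m: float,
             face_height_m: float = FACE_HEIGHT_M) -> float:
    """Downward viewing angle from the camera to a face at a horizontal distance."""
    return math.degrees(math.atan2(camera_height_m - face_height_m, distance_m))


def min_distance_m(camera_height_m: float, max_tilt_deg: float,
                   face_height_m: float = FACE_HEIGHT_M) -> float:
    """Horizontal distance needed to keep the tilt at or below max_tilt_deg."""
    return (camera_height_m - face_height_m) / math.tan(math.radians(max_tilt_deg))
```

Under these assumptions, a camera at 2.5 m looking at a face 5 m away is tilted by about 10°, while raising it to 4 m pushes the tilt past 25° at the same distance. Keeping the tilt at, say, 15° then requires moving the identification zone about 9 m away, which in turn calls for a long-focus lens.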

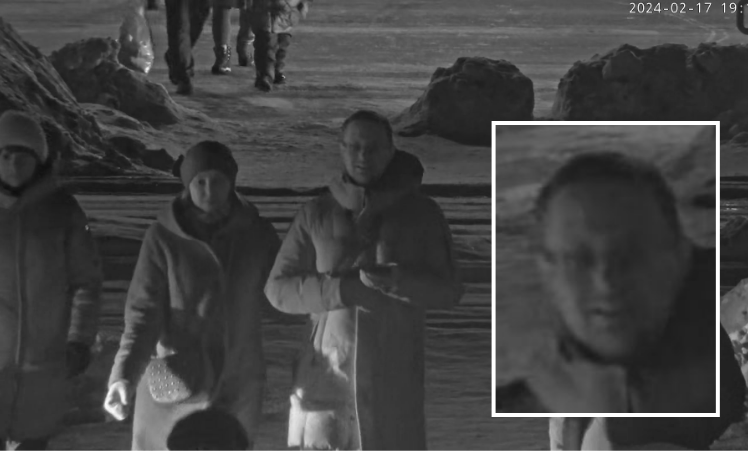

14. Illumination Level (<200 lux)

Impact on successful identification: Critical (98 losses out of 1,056 attempts)

Night tests without additional lighting in the identification zones (only the standard city street lighting, which provided less than 50 lux) showed a threefold decrease in performance.

Best Practices for Managing External Factors

✅️ Recommended camera manufacturers (with support for long-focus lenses): AXIS, IDIS, Hikvision.

✅️ During installation, check for backlight depending on the cardinal direction in the morning and evening hours, as well as from advertising billboards, traffic lights, and street lamps.

✅️ Configure cameras for night conditions. Without special lighting, a significant portion of identifications will be lost due to insufficient lighting, particularly during the hours from 18:00 to 09:00 in winter, and 22:00 to 07:00 in summer. This can lead to a loss of up to 60% of identifications.

✅️ Avoid using panoramic cameras for identification. Ensure that the “identification spot” is not located in a “transit zone.” It’s better to replace the lens with a long-focus one to capture faces in areas where people are standing, so that their faces stay within the acceptable angle range.

✅️ Ensure that the majority of faces captured by the cameras have a quality score above 40 (you can check this with our online quality assessment tool). Lower quality increases the risk of false identifications or misses.
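
The night-hour windows listed above can drive a simple schedule, for example to switch a camera to its night profile or turn on supplementary lighting. A sketch, assuming the operator decides which dates count as winter or summer; spring and autumn windows are not given here and are left out, and the names are hypothetical. Both windows wrap past midnight, which the check handles explicitly.

```python
from datetime import time

# Low-light windows from the recommendation above: (start, end), end exclusive.
NIGHT_WINDOWS = {
    "winter": (time(18, 0), time(9, 0)),
    "summer": (time(22, 0), time(7, 0)),
}


def in_low_light_window(t: time, season: str) -> bool:
    """True if time-of-day t falls inside the season's low-light window."""
    start, end = NIGHT_WINDOWS[season]
    if start <= end:
        return start <= t < end
    # Window wraps past midnight, e.g. 18:00 -> 09:00.
    return t >= start or t < end
```

A naive `start <= t < end` comparison would never match a window that crosses midnight, which is the most common bug in schedules like this.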

If you have any questions, drop me a message on Telegram! I’m always up for a chat about how face recognition can work for you. Let’s get in touch!